Firefox refuses to use hardware video decoding on macOS

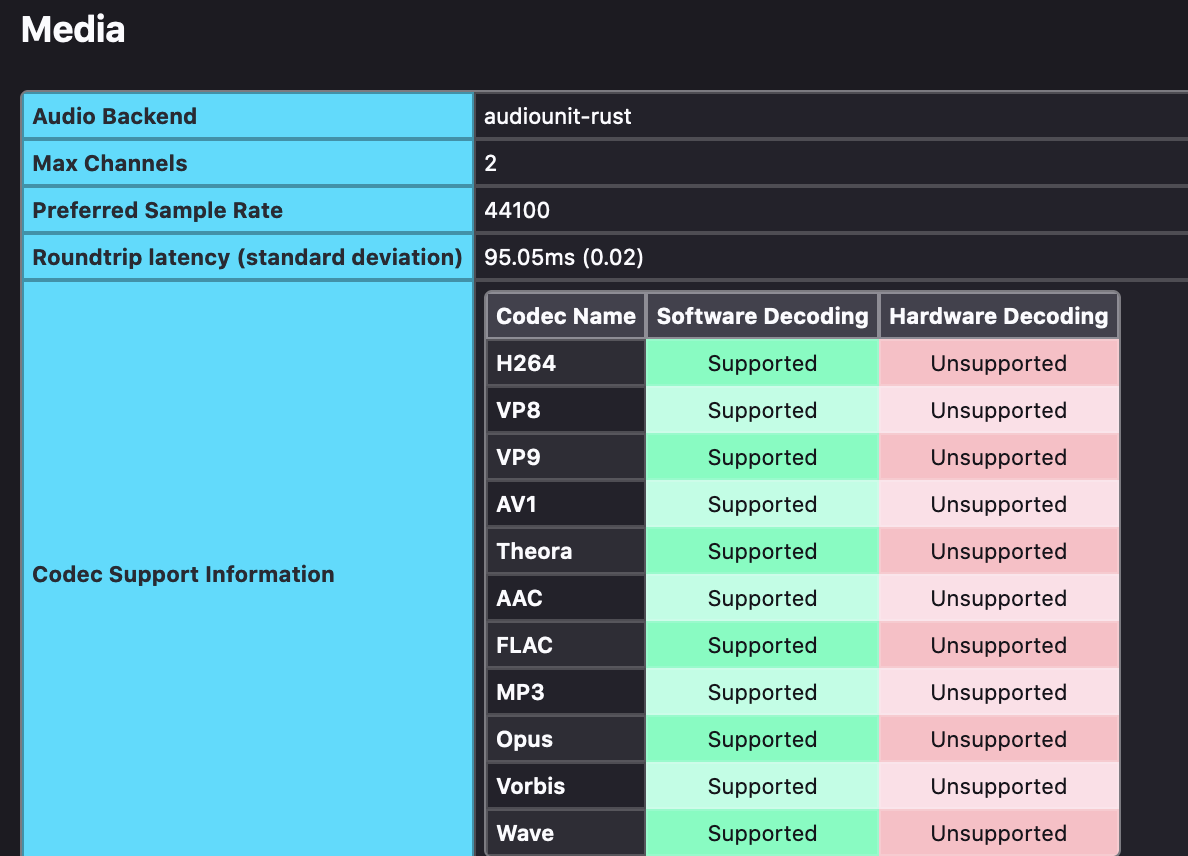

Whenever I play a YouTube video, the fans start spinning and Firefox uses over 100% CPU, most of which is from the media decoding thread. This problem does not occur in Google Chrome. Looking at the section in the troubleshooting info about media decoding, it seems like none of the media formats have hardware decoding enabled, but I have no clue why. I'm confident that the computer does support hardware decoding for them. I have a 2019 MacBook Pro.

I have tried creating a fresh Firefox profile, but it did not help. Any other ideas on how to troubleshoot this?

All Replies (2)

Does it make any difference if you go to about:config and change layers.acceleration.force-enabled and media.hardware-video-decoding.force-enabled to true then restart the browser?
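If it's easier than flipping them by hand, the same two prefs can also be set from a `user.js` file in the profile folder. This is only a sketch, assuming both pref names are still recognized by your Firefox version; forcing acceleration can cause rendering glitches on some hardware, so remove the lines again if it makes things worse.

```js
// user.js, placed in the root of the Firefox profile folder.
// Firefox reads this file at startup and applies each pref.
// Assumption: both pref names below still exist in your Firefox version.

// Force GPU-accelerated layers even if the driver or GPU is blocklisted.
user_pref("layers.acceleration.force-enabled", true);

// Force hardware video decoding even if Firefox would normally disable it.
user_pref("media.hardware-video-decoding.force-enabled", true);
```

Prefs set this way are reapplied on every start, so deleting the lines from `user.js` and resetting the two prefs in about:config undoes the change.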

@zeroknight I think it might be helping, but I'm not 100% sure. The "Data Decoder" thread still uses a significant amount of CPU, but it seems to be lower. I don't know what CPU usage I should expect from "Data Decoder" when hardware decoding is being used.

One thing that makes this hard to debug is that I haven't found a way to know for sure whether hardware decoding is in use, other than guessing from CPU usage. Do you know how to check?

edit: nope, it doesn't seem to have helped
